For a graphics processor to produce high-quality 3D images, it has to deal with a huge amount of data. As screen resolutions and detail levels have increased, graphics processors have had to get faster and more efficient. One of the big bottlenecks in this process is the memory. The amount of memory required has increased, and so has the speed at which data needs to move from video memory to the graphics processor. The primary video graphics memory has been GDDR5. The problem is that it is now reaching its limits in terms of both power and speed. AMD and JEDEC have come up with a new solution they are calling HBM (High Bandwidth Memory). Find out how this could help improve your future PC graphics for both desktop and laptop systems.

Decreasing Speeds but Increasing Bus Width

When you talk about performance and memory, what matters is the total bandwidth at which the memory can communicate with the system. In the simplest terms, faster clock speeds mean that the memory can push more data to the processor. This has been the primary focus of designs over the years. The problem is that as you push speeds, power consumption and heat become increasing problems. Eventually you hit limits with current manufacturing technologies that reduce the ability to improve speeds.

The thing is that memory performance isn’t just about clock rates. There is also the width of the bus. If you have a wider bus, you can push more data through the link between the memory and the processor. This is similar to having extra lanes on a freeway. The more lanes, the more traffic it can handle. In fact, the total bandwidth is generally calculated by multiplying the clock speed of the memory by the bus width in bits. Thus two video cards with the same memory speed (1GHz) but different bus widths (128-bit vs. 256-bit) offer two very different bandwidths (128Gbps vs. 256Gbps).
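The multiplication described above can be sketched in a few lines of Python. This is only an illustration of the simplified model used in this article (one bit per bus line per clock cycle); the function name `peak_bandwidth_gbps` is made up for this example, and real memory types transfer several bits per pin per clock, so actual figures are higher.

```python
def peak_bandwidth_gbps(clock_ghz: float, bus_width_bits: int) -> float:
    """Simplified peak bandwidth in gigabits per second.

    Assumes one bit moves across each bus line per clock cycle,
    which is the simplification used in the text.
    """
    return clock_ghz * bus_width_bits


# Two hypothetical cards with the same 1GHz memory clock
narrow = peak_bandwidth_gbps(1.0, 128)
wide = peak_bandwidth_gbps(1.0, 256)

print(f"128-bit bus: {narrow:.0f} Gbps")
print(f"256-bit bus: {wide:.0f} Gbps")
```

Doubling the bus width doubles the result without touching the clock, which is the lever HBM pulls.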

High Bandwidth Memory looks to improve the performance of the memory by focusing on the bus width part of the equation. Rather than increasing speeds, you can improve bandwidth by increasing the bus size. Most graphics cards right now have a bus that is between 128 and 384 bits wide. HBM proposes to increase the bus width to 1024 bits or more. This dramatically increases the overall performance of the memory.

To help reduce the power consumption and heat problems of high clock speeds, HBM looks to decrease the clock speed of the memory. Rather than pushing memory upwards of 1000MHz, you reduce the clock speed to around 500MHz. While you are halving the speed, the increase in the bus size more than makes up for it. As the technology improves, though, the clock speeds could theoretically be pushed back up for further bandwidth gains.
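To see why the trade works out, here is a rough sketch using the figures mentioned in this article: a 384-bit bus at 1000MHz against a 1024-bit bus at 500MHz. It uses the same simplified one-bit-per-line-per-clock model, and the helper name `bandwidth_gbps` is an assumption for illustration only. Actual products move more than one bit per pin per clock, so treat the numbers as relative rather than absolute.

```python
def bandwidth_gbps(clock_mhz: float, bus_width_bits: int) -> float:
    """Simplified bandwidth in Gbps: clock (MHz) x bus width (bits) / 1000."""
    return clock_mhz * bus_width_bits / 1000.0


# Figures taken from the article, not from any specific product
conventional = bandwidth_gbps(1000, 384)  # fast clock, narrower bus
stacked = bandwidth_gbps(500, 1024)       # half the clock, much wider bus

print(f"1000MHz x 384-bit:  {conventional:.0f} Gbps")
print(f" 500MHz x 1024-bit: {stacked:.0f} Gbps")
print(f"Relative gain: {stacked / conventional:.2f}x")
```

Even at half the clock, the wider bus comes out ahead in this model, and the lower clock is what helps with power and heat.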

Size Also Matters

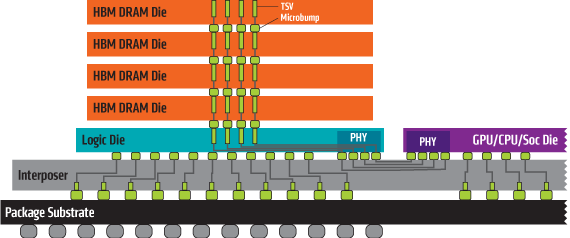

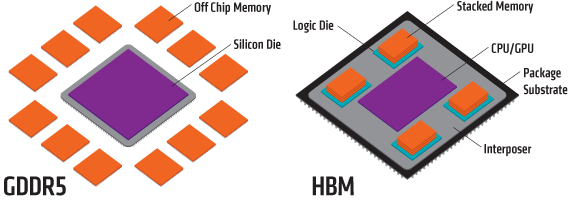

Another issue with the video memory in current computer systems is the size and space that it takes up. Many desktop graphics cards continue to get longer and longer to fit the necessary video memory chips and their interconnects. HBM looks to reduce the overall footprint of the video solution by stacking layers of graphics memory on top of one another. In essence, you are stacking what would be up to four memory chips into the space of a single chip.

The main reason for doing this is that you reduce the length of the interface between the memory and the graphics processor. The shorter the distance, the easier it is to increase the density of the bus. The side effect is that it also takes up less space than the older solution. This is similar to the benefits Samsung saw with its 3D NAND technology in solid state drives, which helped push up capacities and performance.

When Will HBM Make it to Market?

AMD is looking to release its first graphics processor to use HBM technology within this quarter (expect it by the end of Summer 2015). More than likely this will not be in its flagship performance graphics card but in a mid-range product. The goal is to improve performance while making things more efficient and cost effective. The most profitable segments of the graphics card market are actually the mid to lower priced tiers rather than the most expensive and highest performance cards. Depending on how it performs and how it does in the market, expect the technology to eventually roll out to other graphics cards.

This technology is not going to be exclusive to AMD. While AMD developed it, it has been pushed by JEDEC, the group that develops memory standards. In addition, NVIDIA has expressed interest in the technology. This means we will likely see it pushed out to many more products in the coming years.